PENTAHO DATA INTEGRATION LOOKS WEIRD DOWNLOAD

This article is still developing … Download Pentaho Data Integration (PDI)

Special thanks go to Matt Casters for providing a lot of info for this article.

Important: The Kettle Beam Plugin is currently under heavy development and is only a few days old. It goes without saying that you must not use this plugin in production.

Matt Casters, the original creator of Kettle/PDI, left Hitachi Vantara at the end of last year to start a new career with Neo4j, and in the last few months he has been very active creating plugins for PDI that solve some longstanding shortcomings. It was in a Slack chat that I suggested he go for Apache Beam, because having Beam support available within PDI would finally catapult it back to being a leading data integration tool, a position that it had kind of lost over the last few years. Remember when PDI was the first drag-and-drop DI tool to support MapReduce? So here we are today, looking at the rapidly evolving Kettle Beam project, which Matt Casters has put a massive effort into (burning a lot of midnight oil).

I suppose there must have been some good reasons on the Pentaho/Hitachi Vantara side to go down their own route.

PENTAHO DATA INTEGRATION LOOKS WEIRD PLUS

Plus Apache Beam at the time already supported several execution engines.

About two years ago Pentaho’s/Hitachi Vantara’s own take on an adaptive execution layer (AEL) was added to PDI. The grand goal has been to design the DI process once and be able to run it via different engines. From the start, Spark as well as PDI’s own engine were supported, and this is still the state today, although in some official slides you can see that the ambition is to extend this to Flink etc. It has also taken several iterations to improve their AEL and get it production-ready. Whenever I ask around, there doesn’t seem to be anyone using AEL in production (which is not to say that there aren’t companies out there using AEL in production, but it is certainly not the adoption rate that I’d hoped for).

From the start I never quite understood why one company would try to create an AEL by itself, considering the open source Apache Beam project was already a top-level Apache project at the time, with wide support from various companies. It seemed quite obvious that this project would move at a more rapid pace than one company alone could ever achieve.
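To make the "design once, run on different engines" idea a bit more tangible, here is a minimal sketch in plain Python. It is purely illustrative: the names `local_engine`, `threaded_engine`, `pipeline` and `ENGINES` are made up for this example and have nothing to do with the actual PDI, AEL or Apache Beam APIs. The assumption is simply that a pipeline is an ordered list of per-row functions, and an engine is anything that knows how to apply that list to some rows.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, List

# Hypothetical model: a step takes one row (a dict) and returns a new row.
Step = Callable[[dict], dict]


def apply_steps(steps: List[Step], row: dict) -> dict:
    """Push a single row through every step, in order."""
    for step in steps:
        row = step(row)
    return row


def local_engine(steps: List[Step], rows: Iterable[dict]) -> List[dict]:
    """Engine 1: process rows sequentially in the current thread."""
    return [apply_steps(steps, row) for row in rows]


def threaded_engine(steps: List[Step], rows: Iterable[dict], workers: int = 4) -> List[dict]:
    """Engine 2: process rows on a thread pool; map() keeps the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: apply_steps(steps, row), rows))


# The pipeline is designed once ...
pipeline: List[Step] = [
    lambda r: {**r, "name": r["name"].strip().title()},  # clean up the name
    lambda r: {**r, "name_length": len(r["name"])},      # derive a new field
]

rows = [{"name": "  matt "}, {"name": "diethard"}]

# ... and handed unchanged to whichever engine is selected.
ENGINES: Dict[str, Callable[..., List[dict]]] = {
    "local": local_engine,
    "threaded": threaded_engine,
}
results = {name: engine(pipeline, rows) for name, engine in ENGINES.items()}
```

The point of the sketch is only that the pipeline definition never mentions the engine. Real engines such as Spark or Flink obviously add distribution, serialization and fault tolerance on top, which is exactly the part that is expensive for a single company to build and maintain alone.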

PENTAHO DATA INTEGRATION LOOKS WEIRD CODE

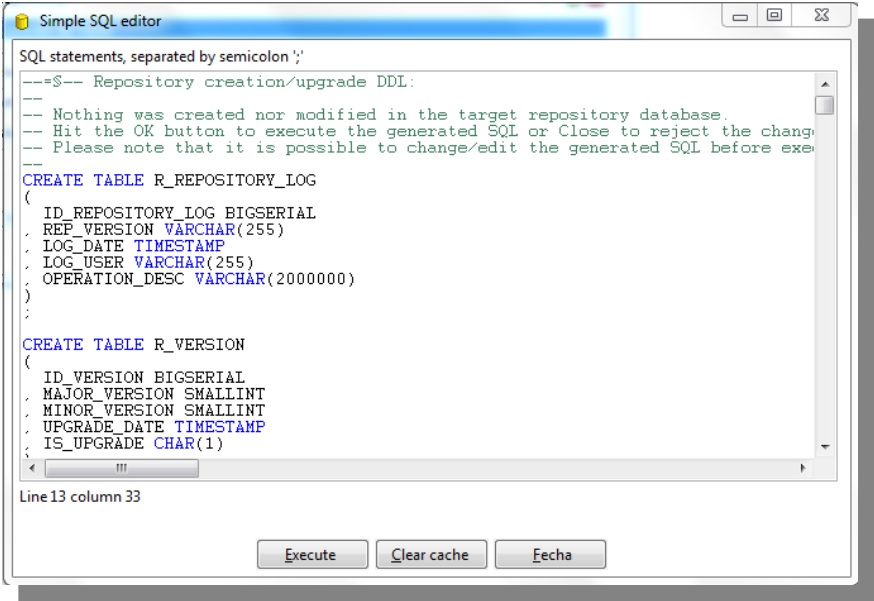

Pentaho Data Integration/Kettle has for a long time offered a convenient graphical interface to create data integration processes without the need for coding (although if someone ever wanted to code something, there is the option available).
